Decoding A/B Testing Statistics in Colab

The Curiosity Trigger

"Why do some A/B tests fail silently?" To find out, I fired up Google Colab, built demo conversion datasets, and worked through the statistics.

Experiment Playground

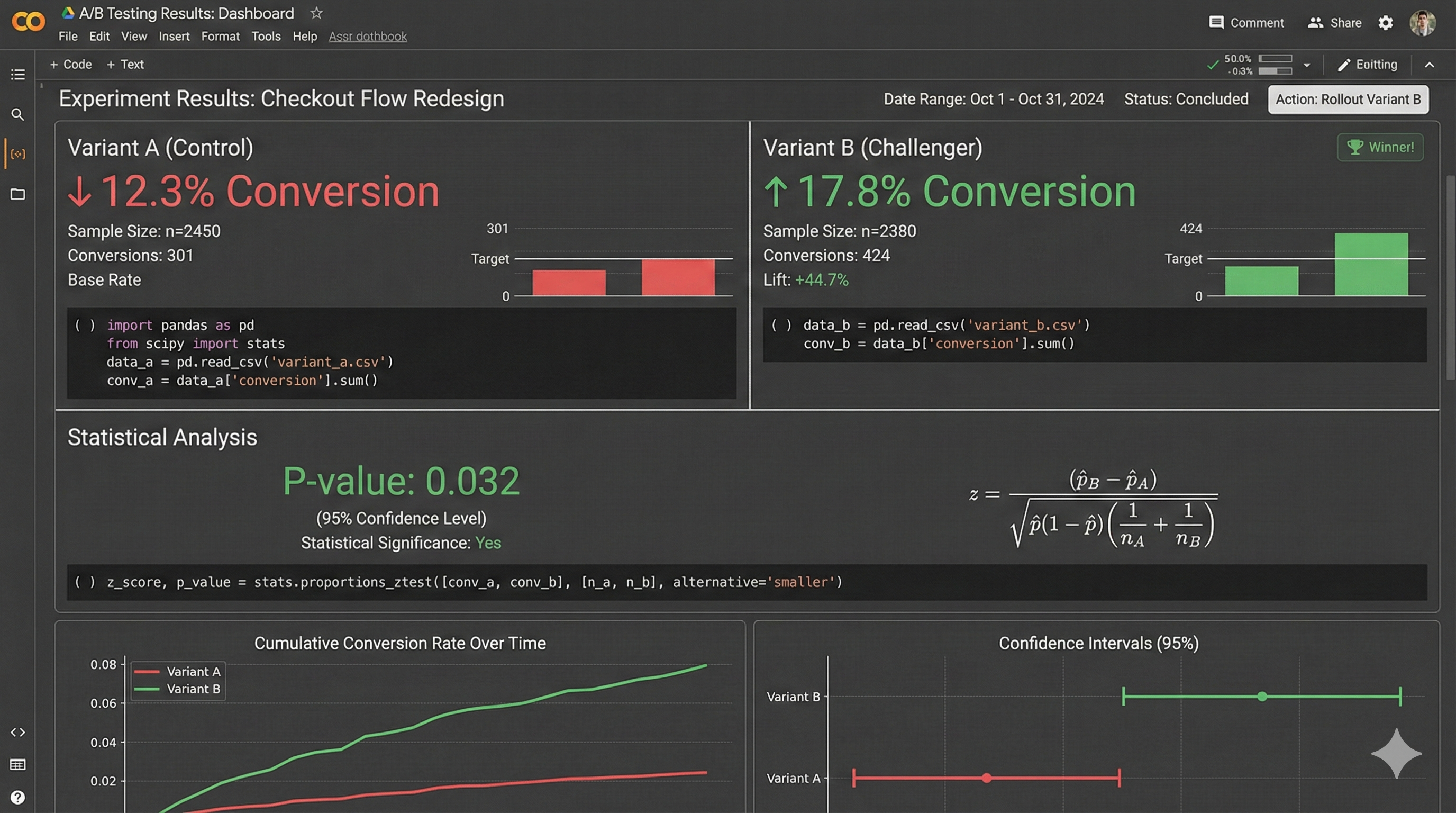

Z-Test Bootcamp: Two-proportion z-tests showed p-values shrinking as sample size grew for the same observed lift. In the demo data, a 5% lift needed about 10x the samples to reach significance.
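
To make the sample-size effect concrete, here is a minimal sketch of a pooled two-proportion z-test. It is not the notebook's original code: the function name `two_proportion_ztest`, the 10.0% vs 10.5% conversion rates (reading "5% lift" as a relative lift), and the sample sizes are all assumptions chosen for illustration. It uses only the standard library (`math.erfc` for the normal tail) so it runs anywhere, including Colab.

```python
import math

def two_proportion_ztest(conv_a, n_a, conv_b, n_b):
    """Pooled two-proportion z-test.

    Returns (z, two-sided p-value, one-sided p-value for H1: B > A).
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both arms convert equally
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Standard normal upper tail: P(Z > z) = 0.5 * erfc(z / sqrt(2))
    p_one_sided = 0.5 * math.erfc(z / math.sqrt(2))
    p_two_sided = math.erfc(abs(z) / math.sqrt(2))
    return z, p_two_sided, p_one_sided

# Hypothetical rates: 10.0% baseline vs 10.5% variant, same lift at every size
results = {}
for n in (1_000, 10_000, 100_000):
    conv_a = round(0.100 * n)
    conv_b = round(0.105 * n)
    z, p_two, p_one = two_proportion_ztest(conv_a, n, conv_b, n)
    results[n] = (z, p_two, p_one)
    print(f"n per arm={n:>7}  z={z:.2f}  "
          f"two-sided p={p_two:.4f}  one-sided p={p_one:.4f}")
```

With the lift held fixed, the z-statistic grows with the square root of the sample size, so the p-value falls as `n` rises. The function also returns both the one-sided and two-sided p-values so they can be compared on the same data.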

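Quality Traps: A sample-ratio mismatch (SRM) invalidated tests before the conversion metric was even read. A chi-square test on the group counts flagged imbalances instantly. One-sided vs two-sided p-values? Totally different stories: for a z-test, the one-sided p-value is half the two-sided one when the effect points in the hypothesized direction, so the same data can pass one test and fail the other.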
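
The SRM check can be sketched as a chi-square goodness-of-fit test on the two group sizes. This is an illustrative sketch, not the notebook's code: `srm_check`, the 50/50 expected split, the visitor counts, and the strict `alpha=0.001` threshold are assumptions. With two groups there is one degree of freedom, so the chi-square tail probability can be computed with `math.erfc` and no extra libraries.

```python
import math

def srm_check(n_a, n_b, expected_share_a=0.5, alpha=0.001):
    """Chi-square goodness-of-fit test for sample-ratio mismatch.

    Returns (chi2 statistic, p-value, flagged), where flagged is True
    when the observed split is unlikely under the expected split.
    """
    total = n_a + n_b
    exp_a = total * expected_share_a
    exp_b = total - exp_a
    chi2 = (n_a - exp_a) ** 2 / exp_a + (n_b - exp_b) ** 2 / exp_b
    # For 1 degree of freedom, P(X > chi2) = erfc(sqrt(chi2 / 2))
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value, p_value < alpha

# Hypothetical visitor counts for a test designed as a 50/50 split
balanced = srm_check(50_000, 50_200)
skewed = srm_check(50_000, 52_000)

for label, (chi2, p, flagged) in (("balanced", balanced), ("skewed", skewed)):
    print(f"{label:>8}: chi2={chi2:.2f}  p={p:.3g}  SRM flagged={flagged}")
```

A low threshold for `alpha` is a design choice here: the check runs on every experiment, so a strict cutoff keeps false alarms rare while still catching a split that is clearly off.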

The "Now I Get It" Moment

When Variant B beat A at 95% confidence (p < 0.05) with no sample-ratio mismatch? That's experimentation science - not guesswork.

Learning Stack

- Google Colab: Stats laboratory

- Python: Z-tests + chi-square

- Datasets: SaaS conversions

Core Insight

A/B success = rigorous design + quality controls. Statistics don't lie when you ask the right questions.